Clarivate is trusted by many organizations involved in research evaluation and assessment, including universities, governments, and research assessment and ranking organizations globally, to provide accurate, verifiable and trustworthy data.

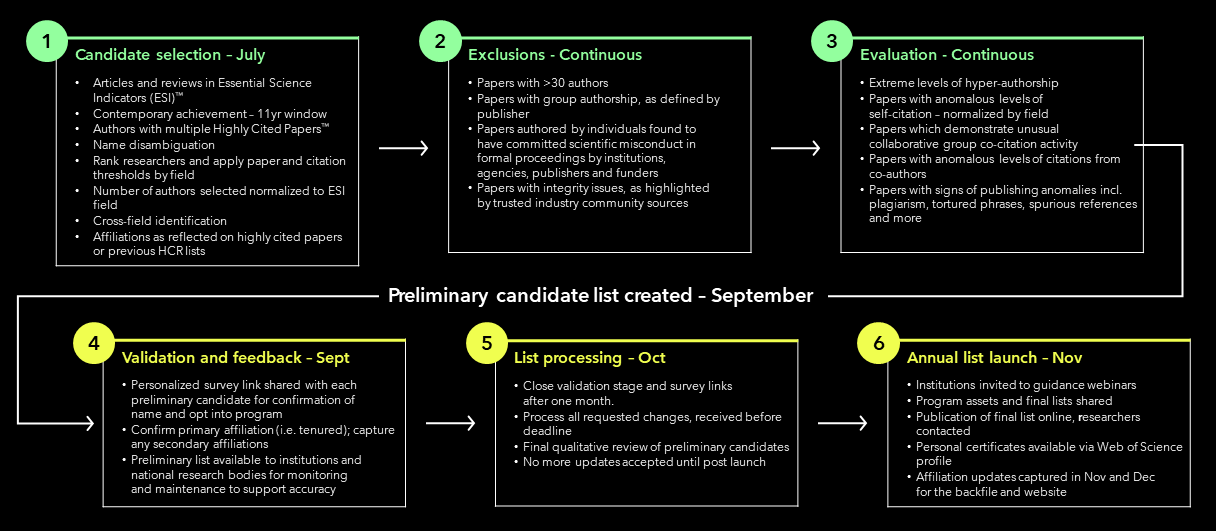

As we identify individuals who show significant and broad influence in their chosen field or fields, we have added more filters and checks to our analysis. Our evaluation and selection strategy is not one-dimensional; the process is more complex than ever and is determined by combining the inter-related information available to us.

Some decisions are straightforward: to award credit to a single author among many tens or hundreds listed on a paper strains reason. Therefore, we eliminate from our analysis any Highly Cited Paper with more than 30 authors or with explicit group authorship as defined by the publisher. Beyond this, researchers found to have committed scientific misconduct in formal proceedings conducted by their institution, a government agency, a funding agency, or a publisher cannot be selected as a Highly Cited Researcher.
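The authorship rule above can be illustrated with a minimal sketch. It is not Clarivate's implementation: the `Paper` record, its field names and the `has_group_authorship` flag are hypothetical, and only the 30-author threshold comes from the text.

```python
from dataclasses import dataclass

MAX_AUTHORS = 30  # threshold stated in the text: papers with more than 30 authors are dropped


@dataclass
class Paper:
    # Hypothetical record; field names are assumptions for illustration only.
    title: str
    author_count: int
    has_group_authorship: bool = False  # assumed flag for publisher-defined group authorship


def is_eligible(paper: Paper) -> bool:
    """Return True if the paper passes the authorship filter described above."""
    return paper.author_count <= MAX_AUTHORS and not paper.has_group_authorship


def eligible_papers(papers: list[Paper]) -> list[Paper]:
    """Keep only the papers that pass the authorship filter."""
    return [p for p in papers if is_eligible(p)]


sample = [
    Paper("Small team study", author_count=4),
    Paper("Exactly thirty authors", author_count=30),
    Paper("Large collaboration", author_count=31),
    Paper("Consortium paper", author_count=12, has_group_authorship=True),
]
kept_titles = [p.title for p in eligible_papers(sample)]
```

Note that a paper with exactly 30 authors is kept, since the text excludes only papers with more than 30.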

ESI field of Mathematics

We have chosen to exclude the Mathematics category from our analysis for this year.

The field of Mathematics differs from other categories in ESI. It is a highly fractionated research domain, with few individuals working on a number of specialty topics. The average rate of publication and citation in Mathematics is relatively low, so small increases in publication and citation tend to distort the representation and analysis of the overall field. Because of this, the field of Mathematics is more vulnerable to strategies to optimize status and rewards through publication and citation manipulation, especially through targeted citation of very recently published papers which can more easily become highly cited (top 1% by citation). This not only misrepresents influential papers and people; it also obscures the influential publications and researchers that would have qualified for recognition. The responsible approach now is to afford this category additional analysis and judgement to identify individuals with significant and broad influence in the field.

Because Clarivate is trusted by global organizations for research evaluation and assessment, we have a responsibility to provide accurate, verifiable, and trustworthy data. At the Institute for Scientific Information, we must make difficult choices in our commitment to respond to threats to research integrity across many fields. Our response to this concern has been to take advice from experts and consult with leading bibliometricians and mathematicians to discuss our future approach to the analysis of this field.

Upholding research integrity

Together with our community partners, we need to play our part to respond to a rise in threats to research integrity in many areas. We therefore look for anomalies in the scholarly record that may seriously undermine the validity of the data analyzed for Highly Cited Researchers. These activities may represent efforts to game the system and create self-generated status.

In 2022, with the assistance of Retraction Watch and its unparalleled database of retractions, we extended our qualitative analysis to all retracted papers to detect cases in which a potential preliminary candidate’s publications may have been retracted for reasons of misconduct (such as plagiarism, image manipulation, fake peer review). We searched for evidence of publication anomalies for those individuals on the preliminary list of Highly Cited Researchers. This extended analysis proved valuable in identifying researchers who do not demonstrate true, community-wide research influence.

We also receive expressions of concern from identified representatives of research institutes, national research managers and our institutional customers, along with information shared with us by other community groups (e.g. For Better Science, PubPeer). Some of these resources include anonymous or ‘whistleblower’ sources. We also consider these where we can verify claims through direct observation.

Our response evolves each year, and we now look at a growing number of factors when evaluating papers including, but not limited to:

- Extreme levels of hyper-authorship of papers. Our expectation is that an author has provided a meaningful contribution to any paper which bears their name, and the publication of multiple papers per week over long periods strains our understanding of normative standards of authorship and credit.

- Excessive self-citation – We exclude papers which reveal unusually high levels of self-citation. For each ESI field, a distribution of self-citation is obtained, and extreme outliers (a very small fraction) are identified and evaluated. We also look for evidence of prodigious, very recent publications that represent research of incremental value, accompanied by high levels of author self-citation. For a description of the methodology used to exclude authors with very high levels of self-citation, please see: Adams, J., Pendlebury, D. and Szomszor, M., “How much is too much? – The Difference between Research Influence and Self-Citation Excess,” Scientometrics, 123 (2):1119–1147, May 2020.

- Unusual patterns of collaborative group citation activity and anomalous levels of citations from co-authors. The identification of networks of co-authors raises the possibility that an individual’s high citation counts may be highly reliant on citations from this network; if more than half of a researcher’s citations derive from co-authors, we consider this to be narrow influence, rather than the broad community influence we seek to reflect.
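The co-author citation check in the last item can be sketched as follows. This is an illustrative approximation under stated assumptions, not ISI's actual method: the function names and the data shapes (author names held in sets) are hypothetical, and a citation is counted as coming from a co-author when the citing paper lists at least one of the researcher's co-authors. Only the "more than half" threshold is taken from the text.

```python
def coauthor_citation_share(coauthors: set[str], citing_author_lists: list[set[str]]) -> float:
    """Fraction of citing papers that include at least one of the researcher's co-authors.

    `coauthors` and `citing_author_lists` are hypothetical inputs: the set of
    everyone the researcher has published with, and one author set per citing paper.
    """
    if not citing_author_lists:
        return 0.0
    from_coauthors = sum(1 for authors in citing_author_lists if authors & coauthors)
    return from_coauthors / len(citing_author_lists)


def has_narrow_influence(share: float, threshold: float = 0.5) -> bool:
    """True when more than half of the citations derive from co-authors."""
    return share > threshold


coauthors = {"A. Author", "B. Author"}

# Two of four citing papers include a co-author: exactly half, so not flagged.
balanced = [{"A. Author", "X. Other"}, {"Y. Other"}, {"B. Author"}, {"Z. Other"}]

# Three of four citing papers include a co-author: more than half, so flagged.
concentrated = [{"A. Author"}, {"B. Author", "Y. Other"}, {"A. Author", "B. Author"}, {"Z. Other"}]

balanced_share = coauthor_citation_share(coauthors, balanced)
concentrated_share = coauthor_citation_share(coauthors, concentrated)
```

The strict comparison mirrors the wording "more than half": a researcher with exactly half of their citations from co-authors is not flagged in this sketch.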

ISI analysts use other filters to identify anomalous publishing activities. We can report that, with the implementation of more filters this year, the number of potential preliminary candidates excluded from our final list increased from 500 in 2022 to more than 1,000 this year.

We explicitly call for the research community to police itself through thorough peer review and other internationally recognized procedures to ensure integrity in research and its publication.